AI-Generated Content

There have been a number of notable developments in the world of AI. Video generation has reached remarkably high quality. Games built with the help of AI are being released. And there are innovations that cut the cost of training text-to-image models. Let's get into it.

Sora

Text-to-video by OpenAI

Sora, which is not generally available yet, can generate videos up to a minute long while maintaining visual quality and adherence to the user's prompt. The quality is well beyond what earlier text-to-video models have produced.

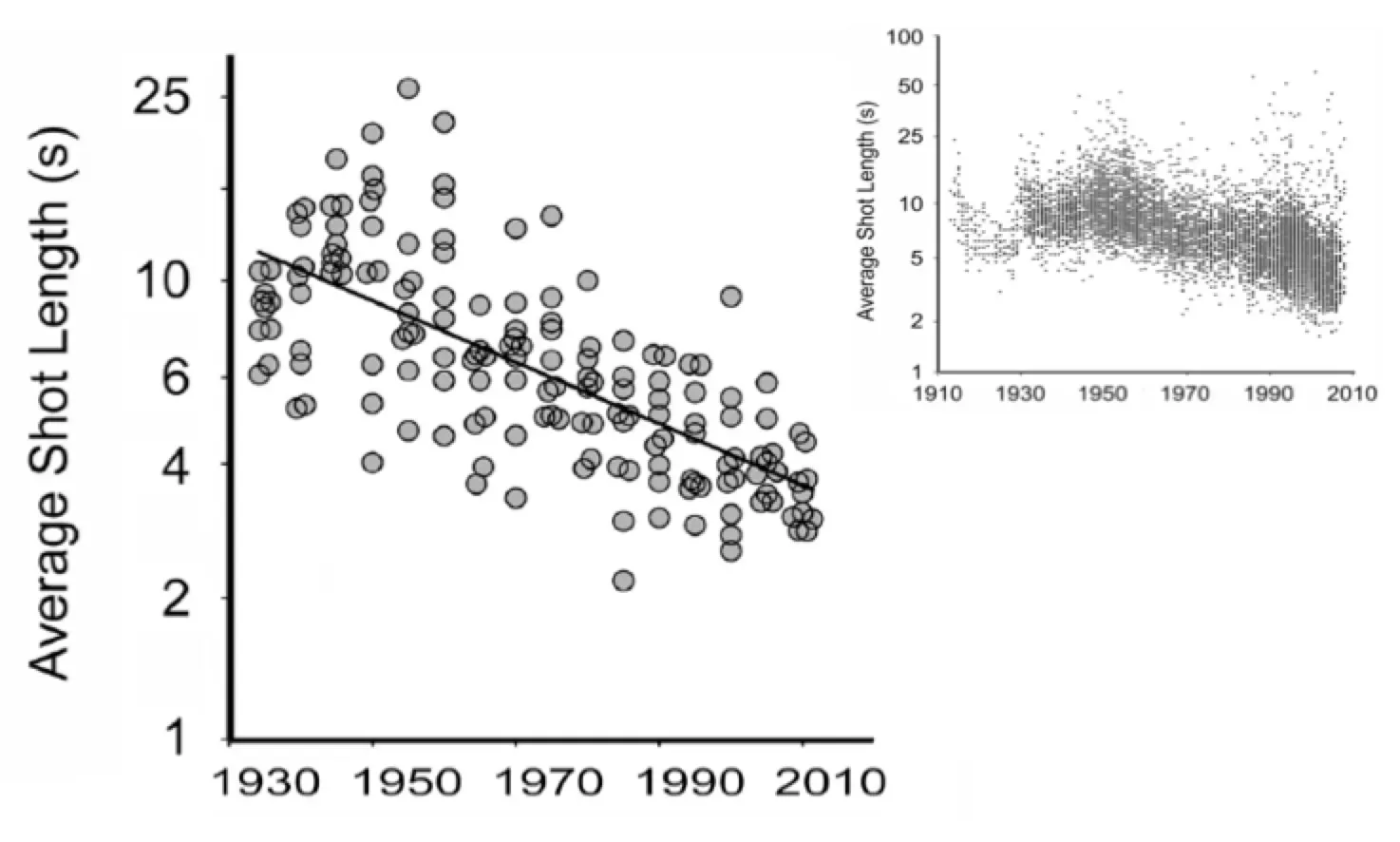

It's worth mentioning that the average shot in a modern movie lasts only a few seconds. A full minute of generated video at this quality is therefore likely to have a long-lasting impact on video content going forward.

Sora can also combine videos to create fantastical transitions that are somewhat mind-bending to follow.

However, it doesn't do everything well. It sometimes produces results that are physically impossible.

I look forward to seeing what content creators make with it. Even without access to something like Sora, creators have been experimenting with other tools to make short films and videos.

New AI Short Film📽️

"DOG DAY"

Everyone needs a dog day once in a while...

TOOLS USED: @runwayml @midjourney @elevenlabsio pic.twitter.com/SZF7qo4IsN

Next on Now (@next_on_now), February 22, 2024

Palworld

A game that is basically Pokemon - but with guns!

Pocketpair released Palworld, which became an overnight success. Steam sales alone have generated more than $200 million in revenue since its launch.

That is a remarkable commercial result for a very small team.

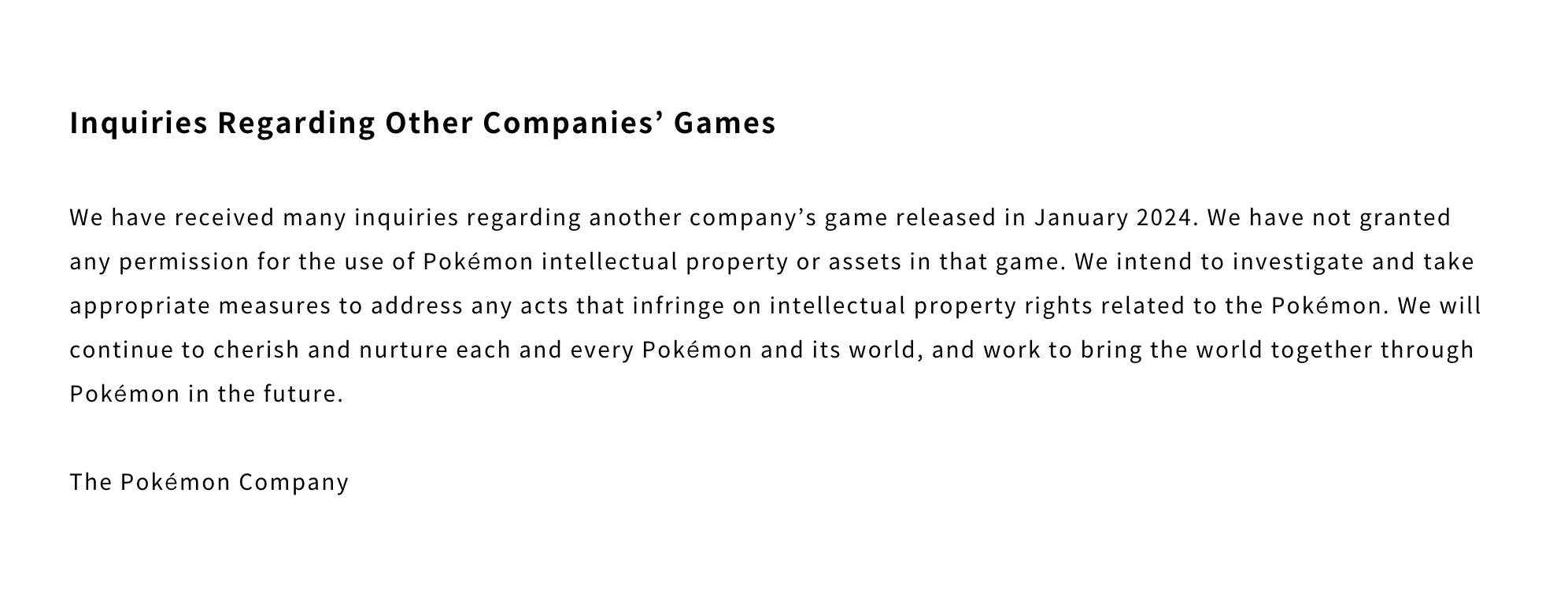

The pals within the game are strikingly similar to Pokemon. That is strange to see, because Nintendo and The Pokemon Company are fiercely protective of their intellectual property, and they are certainly aware of the similarities.

This raises the question: why haven't they done anything yet?

Japan's earlier decision to take a very hands-off approach to regulating AI within its borders may be playing a role.

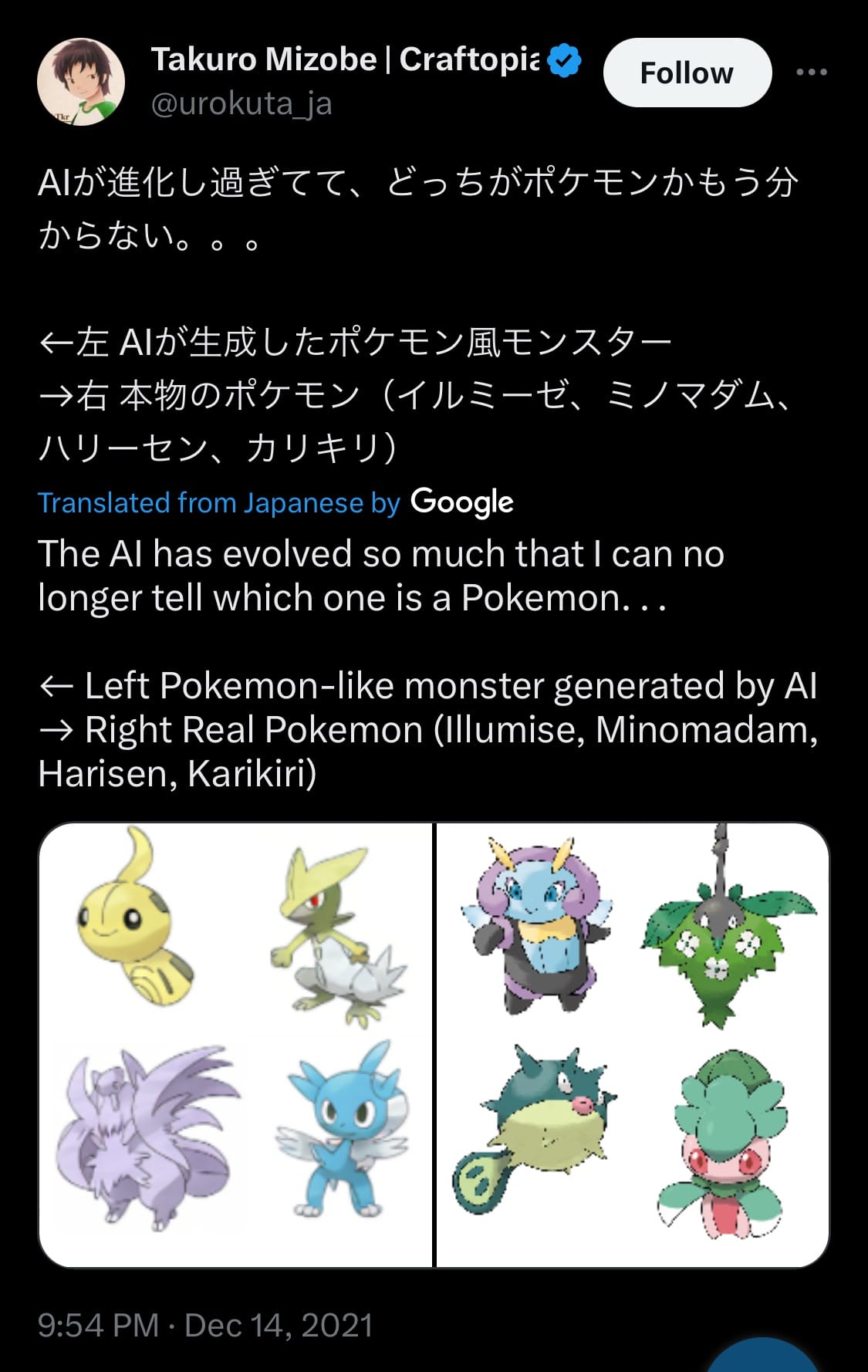

The CEO of Pocketpair, Takuro Mizobe, is obviously drawing heavily on Pokemon, and people have been pursued legally for quite a lot less. He has also clearly used AI in the past to generate things that look just like Pokemon but aren't.

Stable Cascade

A leap in training efficiency for text-to-image models.

By decoupling the text-conditional generation (Stage C) from the decoding to the high-resolution pixel space (Stage A & B), we can allow additional training or finetunes, including ControlNets and LoRAs, to be completed solely on Stage C. This comes with a 16x cost reduction compared to training a similar-sized Stable Diffusion model (as shown in the original paper). Stages A and B can optionally be finetuned for additional control, but this would be comparable to finetuning the VAE in a Stable Diffusion model. For most uses, it will provide minimal additional benefit, and we suggest simply training Stage C and using Stages A and B in their original state.
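
To make the suggested setup concrete, here is a minimal toy sketch of "train Stage C, leave Stages A and B as they are". It uses no real model code: the `Stage` class, `build_pipeline`, and `prepare_finetune` are hypothetical names invented for illustration and are not part of Stable Cascade or any library. It only shows which stages would be marked trainable.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """Hypothetical stand-in for one model stage (not a real Stable Cascade class)."""
    name: str
    role: str
    trainable: bool = False


def build_pipeline():
    # Roles follow the description above: Stage C generates from text,
    # Stages A and B decode to the high-resolution pixel space.
    return [
        Stage("C", "text-conditional generation"),
        Stage("B", "decoding to high-resolution pixel space"),
        Stage("A", "decoding to high-resolution pixel space"),
    ]


def prepare_finetune(stages, train=("C",)):
    """Mark only the named stages as trainable; all others stay frozen."""
    for stage in stages:
        stage.trainable = stage.name in train
    return [stage.name for stage in stages if stage.trainable]


pipeline = build_pipeline()
trainable = prepare_finetune(pipeline)
print(trainable)  # ['C']
```

In a real training script the same idea would usually mean disabling gradients for the frozen stages, so that a finetune, LoRA, or ControlNet only updates Stage C.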