Enhancing Images

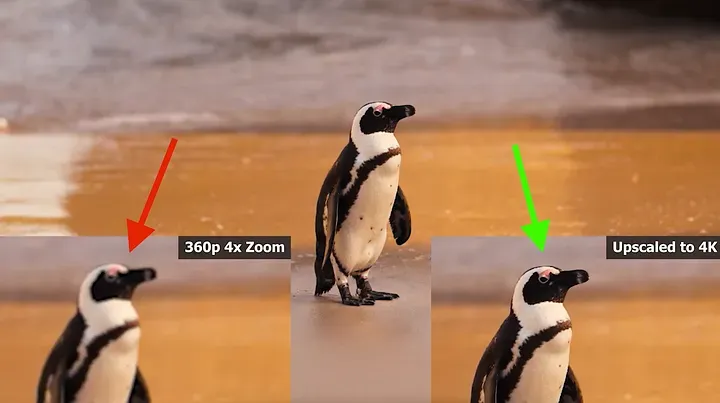

I kept seeing examples of people "upscaling" images in recent years. That's when you use an AI model to resize an image. It looks like this:

It's not just about resizing the image by stretching it. A simple resize would make existing artifacts in the image more noticeable. If the image is blurry, it will remain blurry, pixelated, or show other oddities.

Upscaling, on the other hand, involves the model filling in missing information during resizing based on images from its dataset. This got me thinking about its potential use for data compression or decompression, where smaller images could be sent to save bandwidth.

I came up with a use case: sending images over bandwidth-constrained cell or satellite connections, like from a game camera mounted on a tree near a cabin. I wrote a how-to guide for building one and published it on my GitHub and a few other platforms. To my surprise, it was featured on the front page of a hardware site.

You can go check it out over here: https://www.hackster.io/daniel-legut/a-trail-camera-using-satellites-and-ai-for-animal-research-28b019

My approach focused on finding a new way to study animals using game cameras. These cameras only support cell data and often capture false positives, leading to wasted trips to check images with nothing of interest. This results in underutilized cameras or wasted time, especially in remote areas like Colorado’s mountain valleys or rural farmland.

What’s interesting is that I chose the model I used because it was the most convenient, not necessarily the best. There are better models available, and I was shown ongoing research aimed at improving these upscaling models.

https://spie.org/news/new-neural-framework-enhances-reconstruction-of-high-resolution-images#_=_

It also seems that their research can be reproduced from the following codebase to train a similar model:

This could allow for improved results with what I've tried out so far. It's not entirely clear though.

I tried to show people how metadata can be used to download an image from the hardware based on the information that triggered the camera. It gives a small indication of what’s happening in front of the camera. I refer to this as the Hamming distance.

There's also potential to use multi-modal machine learning models to provide natural language descriptions of what's being seen. Currently, I'm only sending a number as an indicator, but there's no reason a description couldn't be included. Models like the one below could be used for this purpose:

Okay, that's it! I just wanted to share some of these thoughts with you all about what I've been thinking about recently.