LLaDA di LLaDA do, tech progresses on, brah.

It's been a little while since I last hosted a meetup. I'm hosting one again. I thought I'd do a brief summary of the new things people should be aware of when it comes to AI. This isn't a comprehensive overview but mostly just a medley of what's worth keeping in mind.

Reasoning Models

Large Language Models that <think></think>.

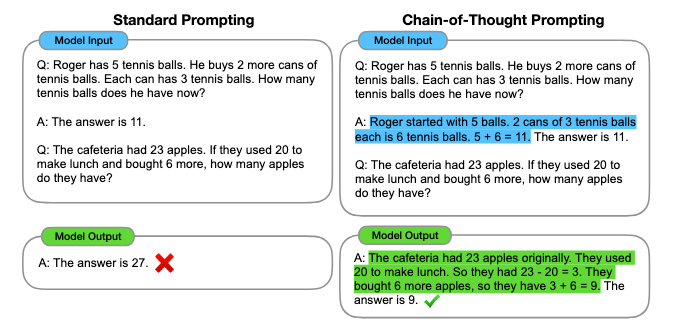

Chain-of-Thought

Chain-of-Thought (CoT) is a way of prompting Large Language Models (LLMs) to get them to break down a problem and "reason" about the request from a user. This technique proved so useful that it is now trained into the models themselves.
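To make the idea concrete, here is a minimal sketch of what CoT prompting can look like in code. The function names and the exact prompt wording are my own assumptions for illustration, not taken from any particular paper or library, and no model is actually called here.

```python
def build_prompt(question: str, chain_of_thought: bool = True) -> str:
    """Return a plain prompt, or one that asks the model to reason step by step first."""
    if not chain_of_thought:
        return f"Question: {question}\nAnswer:"
    # Hypothetical wording; any phrasing that asks for intermediate steps follows the same idea.
    return (
        f"Question: {question}\n"
        "Think through the problem step by step, "
        "then give the final answer on its own line starting with 'Answer:'.\n"
        "Reasoning:"
    )


def extract_answer(completion: str) -> str:
    """Pull the final answer out of a step-by-step completion."""
    for line in reversed(completion.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.split("Answer:", 1)[1].strip()
    return completion.strip()  # fall back to the whole text if no marker is found
```

You would send the output of build_prompt to whatever model you use, then run extract_answer on the text that comes back.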

Where did Chain-of-Thought come from?

It likely originated in this paper by AI researchers at Google:

Many people who were exploring the development of AI Agents were experimenting with different ways of prompting to elicit completion of tasks on behalf of a user. I'd imagine this heavily influenced the creation of reasoning models.

How CoT was added to LLMs:

In DeepSeek's case, with DeepSeek-R1-Zero, they applied Reinforcement Learning by doing the following:

- Started with their base AI model

- Set up a simple template that told the AI to first think through its reasoning in a section called <think>, then give its answer in an <answer> section

- Gave the AI lots of problems to solve

- Rewarded the AI when it got the answers right
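The steps above can be sketched as a template plus a rule-based reward function. This is only an illustration under my own assumptions: the template wording, the regular expression, and the 0.1 partial credit for correct formatting are hypothetical, not DeepSeek's actual code or values.

```python
import re

# Hypothetical template in the spirit of the one described above; the exact wording is an assumption.
TEMPLATE = (
    "First think through your reasoning inside <think> </think> tags, "
    "then give your final answer inside <answer> </answer> tags.\n"
    "Problem: {problem}"
)

# A completion passes the format check if it is a <think> section followed by an <answer> section.
FORMAT = re.compile(r"\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*", re.DOTALL)


def reward(completion: str, expected: str) -> float:
    """Score a completion: 0.0 for bad format, 0.1 for format only, 1.0 for a correct answer."""
    match = FORMAT.fullmatch(completion)
    if match is None:
        return 0.0
    answer = match.group(2).strip()
    # The 0.1 format credit is an assumed value chosen for this sketch.
    return 1.0 if answer == expected.strip() else 0.1
```

The point is that nothing in the reward looks at the reasoning itself. Only the format and the final answer are checked, and the training loop does the rest.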

What happened next was fascinating. Without being shown examples of good reasoning, the AI naturally began to:

- Write longer and more detailed thinking steps

- Check its own work and fix mistakes

- Try alternative approaches when stuck

- Even have "aha moments" where it would realize it made a mistake and start over

While DeepSeek-R1-Zero showed promising results, it had some issues:

- Its reasoning wasn't always easy for humans to read

- It sometimes mixed different languages together

So they created an improved version called DeepSeek-R1:

- First, they collected thousands of examples of good reasoning to give the AI a "cold start" (some initial guidance)

- They fine-tuned their base AI on these examples

- Then they applied reinforcement learning like before, rewarding correct answers

- They collected the best outputs from this AI and used them as new training examples

- Finally, they did another round of reinforcement learning for all types of questions

This multi-stage approach produced an AI that could reason through complex problems while keeping its explanations clear and readable for humans.

The "Aha Moment"

One of the coolest things they observed was that the AI sometimes had what they called an "aha moment" - where it would be working on a problem, realize it made a mistake, and then say something like "Wait, wait. Wait. That's an aha moment I can flag here," and start over with a better approach.

The AI wasn't specifically programmed to do this - it emerged naturally as the AI learned to solve problems more effectively through reinforcement learning.

By the end of training, their model could solve advanced math problems, code complex programs, and answer scientific questions at a level comparable to the best AI systems available, all by learning to think through problems step by step, just like humans do.

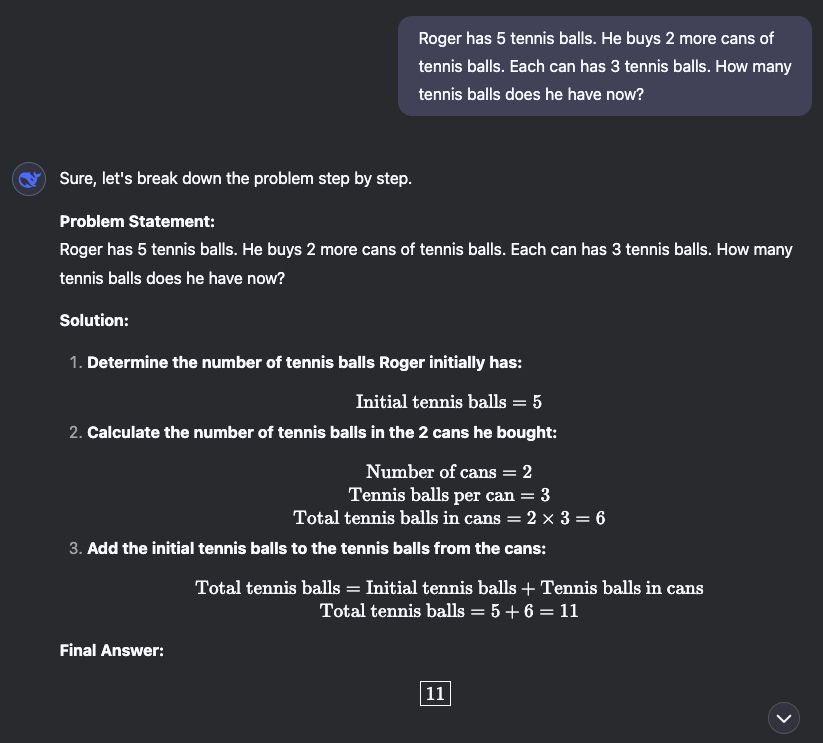

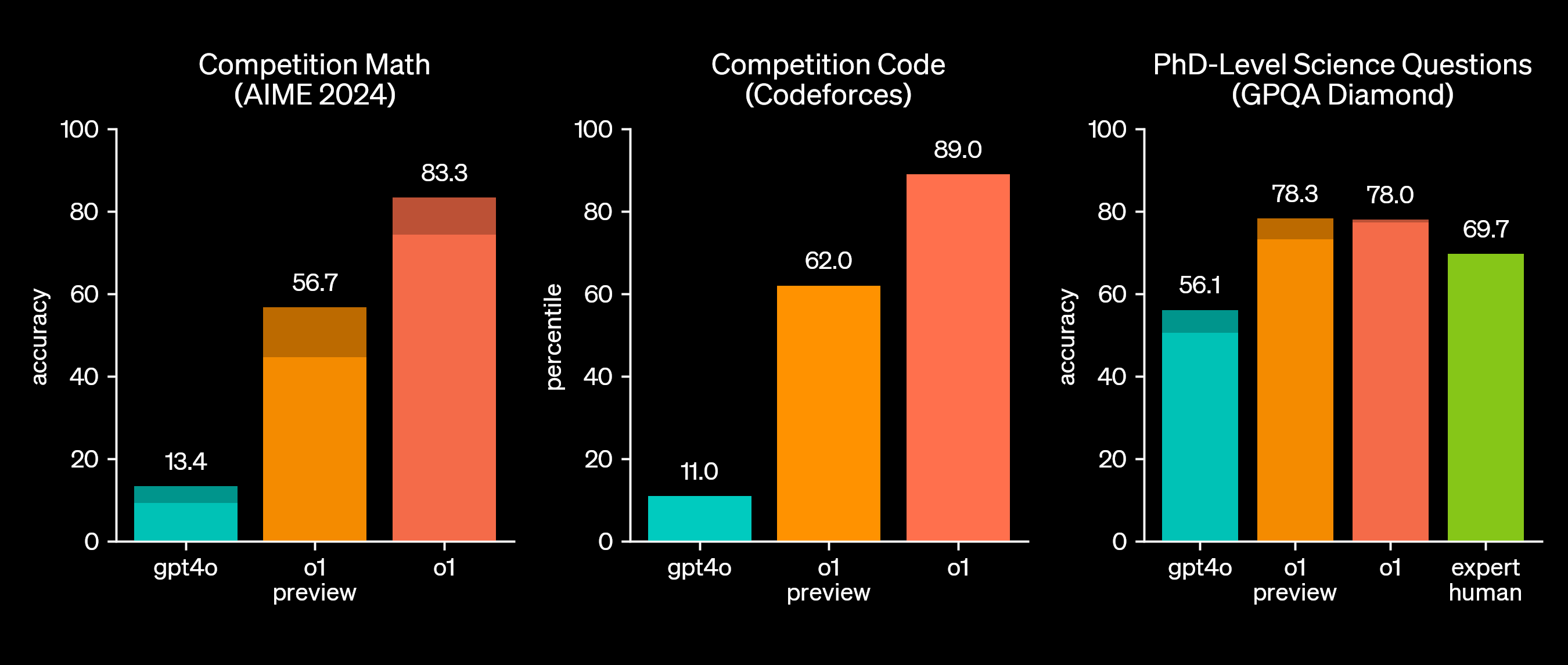

OpenAI o1 - ChatGPT

One of the first popular reasoning models. It appeared in September of 2024 as o1-preview.

DeepSeek R1

Towards the end of this past January, DeepSeek, a research lab based in China, released their model DeepSeek R1 and demonstrated how a reasoning model could be trained with fewer resources. It showed that less compute on Nvidia GPUs in data centers was needed to get results comparable to OpenAI's o1 reasoning model. This shook the confidence of many investors in companies such as Nvidia:

At the time, some investors were pointing to something called the Jevons paradox:

Nvidia seems to have somewhat recovered in the past month:

Open R1 - HuggingFace

The DeepSeek-R1 release leaves open several questions about:

Data collection: How were the reasoning-specific datasets curated?

Model training: No training code was released by DeepSeek, so it is unknown which hyperparameters work best and how they differ across different model families and scales.

Scaling laws: What are the compute and data trade-offs in training reasoning models?

These questions prompted us to launch the Open-R1 project, an initiative to systematically reconstruct DeepSeek-R1’s data and training pipeline, validate its claims, and push the boundaries of open reasoning models. By building Open-R1, we aim to provide transparency on how reinforcement learning can enhance reasoning, share reproducible insights with the open-source community, and create a foundation for future models to leverage these techniques.

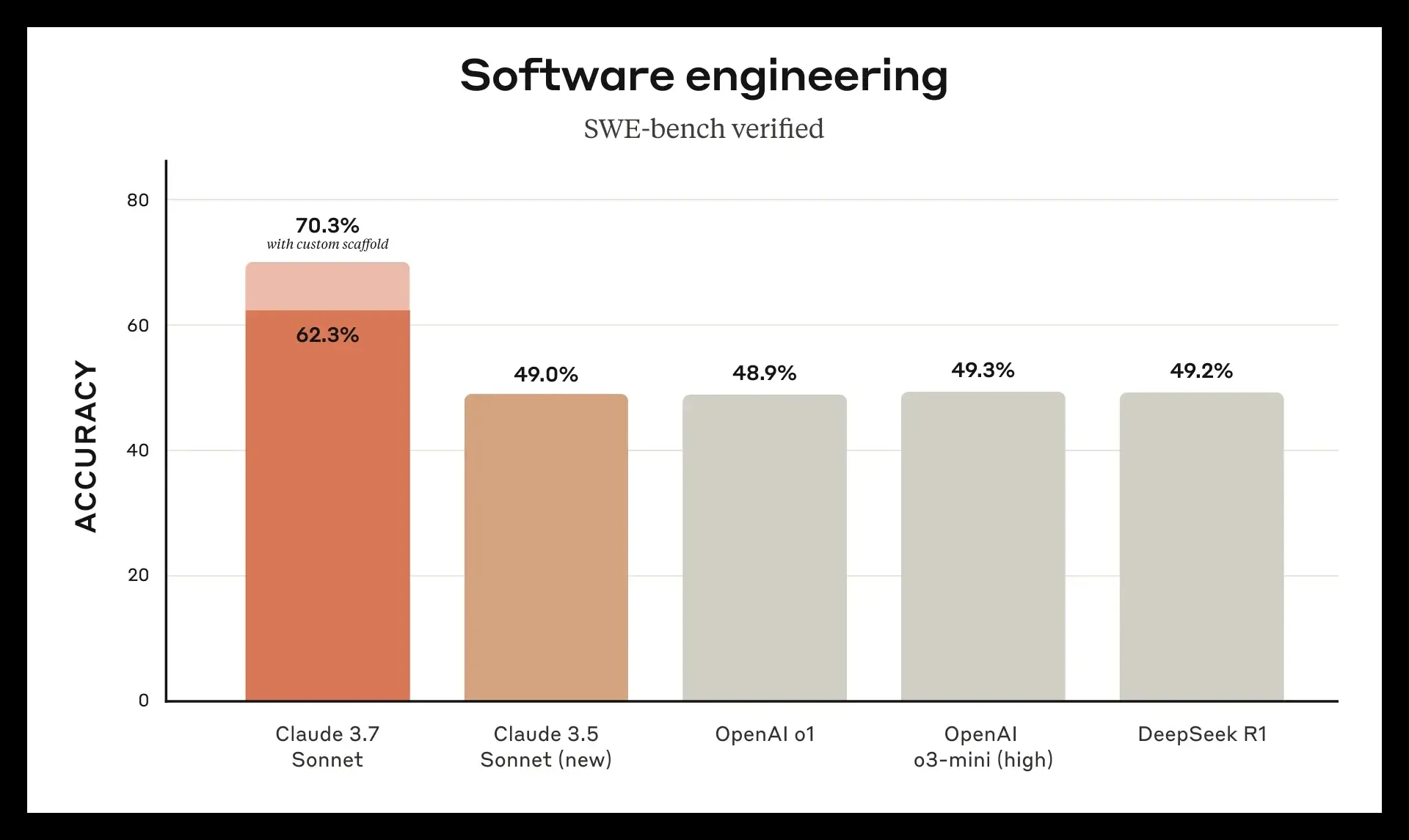

Claude 3.7 - Anthropic

Anthropic released a reasoning model with their Claude 3.7 release this month.

AI Agents

Typing out in plain English what you want your computer to do.

I believe the success of reasoning models, which were heavily influenced by what people were doing with AI Agents, led companies like OpenAI to explore making their own AI agent. They call it OpenAI Operator. I believe only Pro users have access, and Pro costs about $200 a month.

When others see this, they think AI Agents. When I see this, I think Selenium.

Selenium is a powerful automation framework built on a web standard supported by all major browsers. At its core, it allows developers to programmatically control web browsers, essentially letting code interact with websites just as a human would: clicking buttons, filling forms, and extracting information.

If it's not using Selenium, then it's at the very least making use of the web standard that web browsers go by.
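For anyone who hasn't seen Selenium before, here is a minimal sketch of the kind of thing it does: open a page, fill in a form, submit it, and read the results. The URL and CSS selectors are hypothetical placeholders. The helper takes the driver as an argument and uses the string "css selector" (the value of Selenium's By.CSS_SELECTOR) so it can be tried without a browser. Real pages often also need an explicit wait for results to load, which I've left out.

```python
CSS = "css selector"  # the string value of Selenium's By.CSS_SELECTOR


def search_page(driver, url: str, box_selector: str, query: str, result_selector: str) -> list[str]:
    """Open a page, type a query into a form field, submit it, and return the text of the results."""
    driver.get(url)
    box = driver.find_element(CSS, box_selector)
    box.send_keys(query)
    box.submit()
    return [el.text for el in driver.find_elements(CSS, result_selector)]


def main() -> None:
    # Imported here so the helper above can be exercised without Selenium or a browser installed.
    from selenium import webdriver

    driver = webdriver.Chrome()
    try:
        # Hypothetical URL and selectors; replace them with ones from a real page.
        titles = search_page(
            driver, "https://example.com/search", "input[name='q']", "used bikes", "h2.title"
        )
        print(titles)
    finally:
        driver.quit()  # always close the browser, even if something fails
```

Calling main() launches Chrome, assuming Selenium and a matching browser are installed. An AI agent that drives a browser is doing roughly this, with a model deciding which selectors to use and what to type.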

I've done some interesting things with Selenium back when I was exploring software for resellers of secondhand goods.

From time to time, I use Selenium to grab data from the internet and I've become quite proficient at it. You can see an example of code I've written here:

The above code relies on the fact that desktop software built with Electron has a web browser inside it, which meant I could try controlling it with Selenium. Other desktop software could be driven by Selenium in much the same way that a web browser can be controlled. There are many very popular applications that were built with Electron and could potentially be controlled by Selenium and, by extension, an AI agent.

However, in the above example, I was just helping some people I know have a path to move their group off of Clubhouse. Platform risk is a real danger and it happens. When you're on the bleeding edge, sometimes you get cut. Who remembers Vine? Now it's all about TikTok, or wait? Is it RedNote now? Sometimes people put enormous effort into one platform and don't have a path to get off of it when the platform gets into trouble.

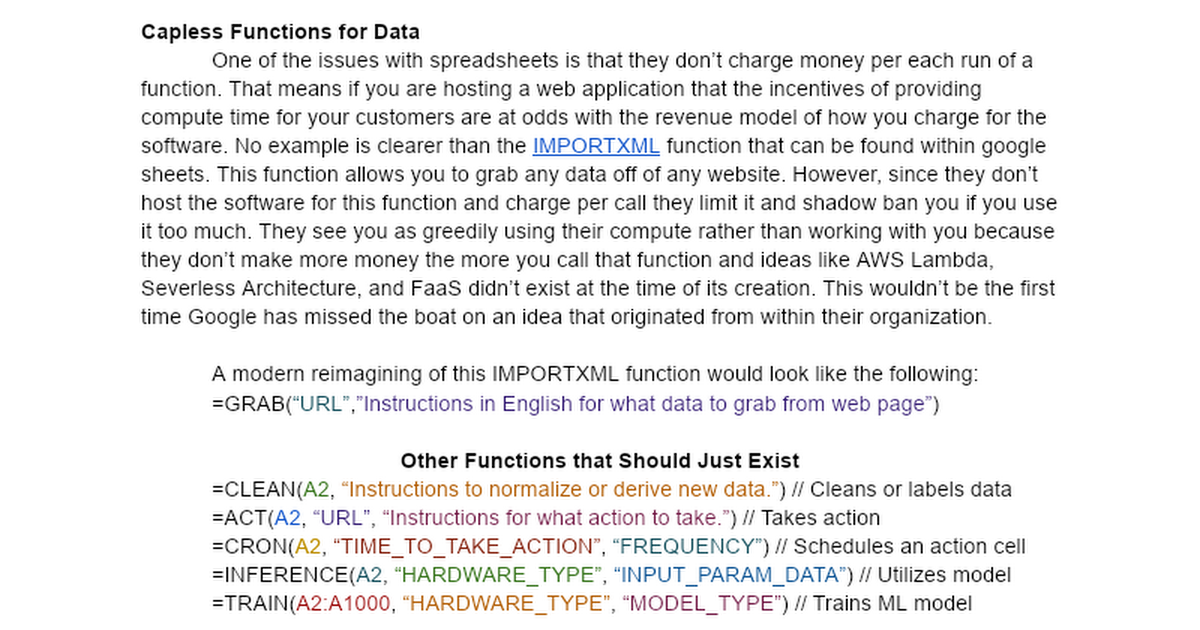

AI Agents in Spreadsheets

A Personal Opinion

=GRAB("URL", "Instructions in English for what data to grab from web page.")

=ACT(A2, "URL", "Instructions for what action to take at web page.")

I think people should be able to define and create their AI agents within spreadsheets. It would take only a handful of functions to do so.
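As a rough sketch of what could sit behind a function like GRAB, here is one way it might work: fetch the page, hand the text and the instructions to a model, and return the value for the cell. Everything here is hypothetical. The grab name, the prompt wording, and the fetch and ask_model callables are assumptions of mine, passed in as arguments so no particular HTTP library or model API is implied.

```python
from typing import Callable


def grab(
    url: str,
    instructions: str,
    fetch: Callable[[str], str],
    ask_model: Callable[[str], str],
) -> str:
    """Hypothetical backend for =GRAB(url, instructions): fetch a page, let a model extract the value."""
    page_text = fetch(url)  # fetch is any function that returns the page content as text
    prompt = (
        "Extract the requested data from the web page below.\n"
        f"Instructions: {instructions}\n"
        f"Page:\n{page_text}\n"
        "Reply with only the extracted value."
    )
    # ask_model is any function that sends a prompt to a model and returns its reply
    return ask_model(prompt).strip()
```

ACT would follow the same shape, except the model's reply would be turned into browser actions instead of a cell value.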

If you don't want to read the above document, you can watch a video I put together showing what's missing in spreadsheets.

Code editors with built-in support for LLMs and Reasoning Models are all the rage now, but what about helping people who do their work within spreadsheets?

AI Code Editors

Writing software is easier than it used to be.

Cursor

Windsurf

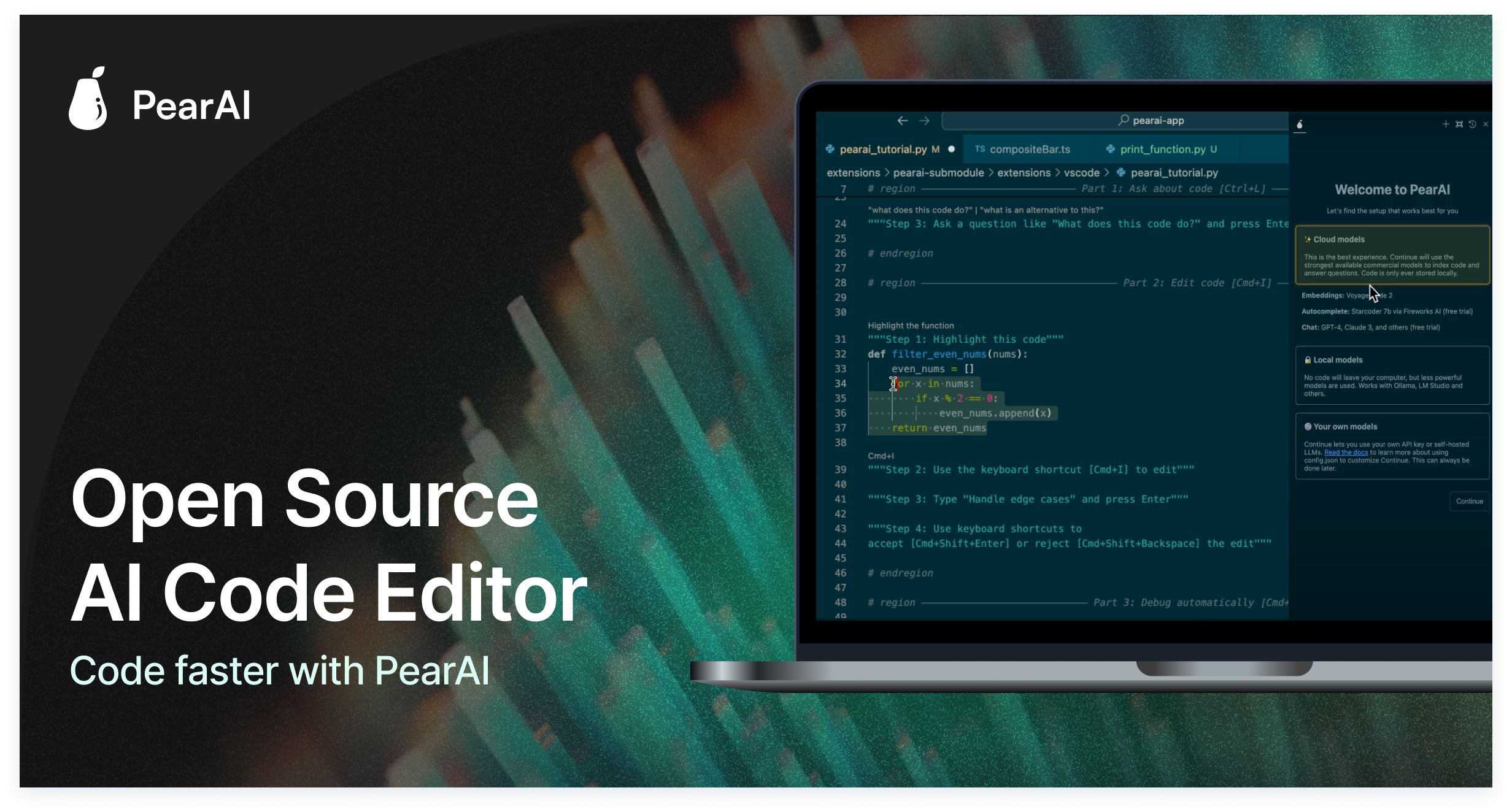

PearAI

PearAI evidently forked an OSS code editor, then later got funding from Y Combinator for it. It's commonplace to rely on open source code, and fairly normal to use other people's software within your own, but PearAI crossed a line by not giving credit to the original authors, and that got them in trouble.

PearAI forked the OSS code editor Continue, which was itself funded by YC, and got YC funding for it. Also the editor they forked is itself a fork of VS Code, but is not to be confused with Void Editor, which is a third YC funded VS Code fork with AI features. It's YC funded VS Code forks with AI all the way down. - https://news.ycombinator.com/item?id=41701709

Continue.dev

Void Editor

For reference, VS Code is an editor made by Microsoft that is pretty slick and I use it. It's just a regular code editor and it has a plug-in ecosystem where each plugin is called an extension, which raises the question: why didn't they just make a plugin? Maybe you can't make money off of extensions?

Cline

Ollama

Run the models on your own hardware.

Aider

Get help with writing code with AI within your terminal.

Many of these I have not tried out myself. I mostly do quite a bit of copying and pasting of snippets directly from chat interfaces. I plan on trying out Aider because it seems to be the most promising. I mostly don't like being too far away from what the model underneath is doing, and an editor kind of obscures that. So I believe something lightweight is important for me.

Large Language Diffusion Models

LLaDA di LLaDA do, tech progresses on, brah.

A new type of model that combines techniques from both diffusion models and large language models. Diffusion models are normally used to generate images. These LLaDA models seem to be faster at smaller scales. This is something to keep an eye on.