Recent AI Demos

I hosted another meetup about AI and, as I have in the past, I'm using this post as my speaker notes. There are some cool demos in this post that are worth playing around with. You should definitely scroll through and try one or two of them. (Ctrl+F for "demo" is your friend.)

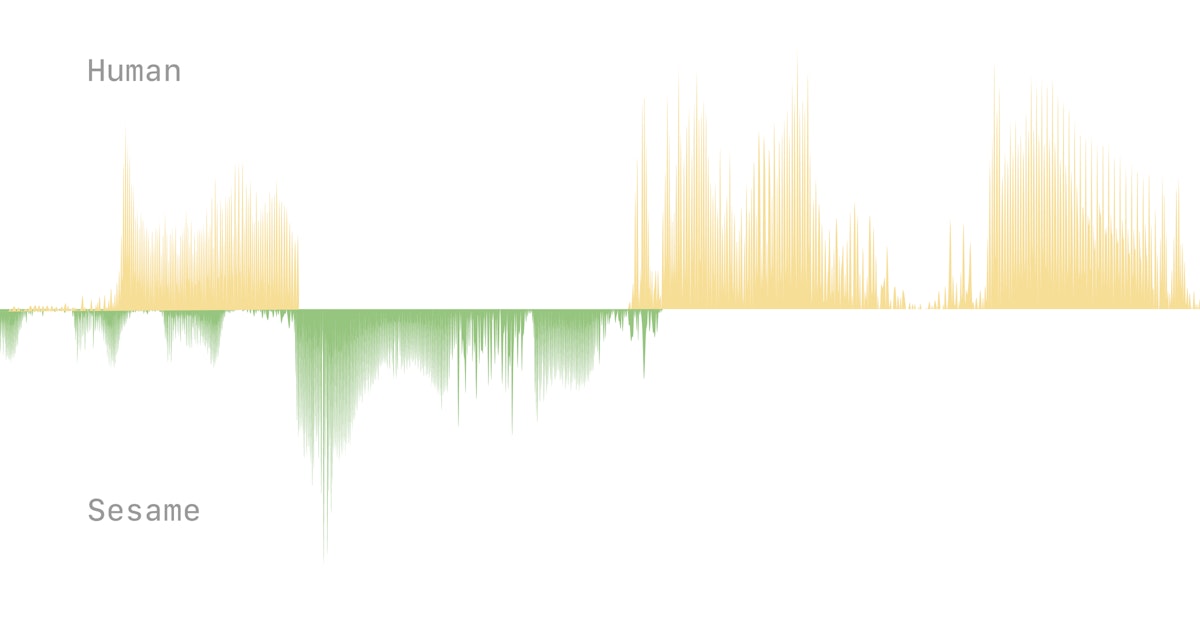

Sesame

A very real-feeling demo of a voice conversation with AI.

Goal is to achieve “voice presence”—the magical quality that makes spoken interactions feel real, understood, and valued. We are creating conversational partners that do not just process requests; they engage in genuine dialogue that builds confidence and trust over time. In doing so, we hope to realize the untapped potential of voice as the ultimate interface for instruction and understanding. - Sesame AI

It's worth spending a few minutes in conversation with this demo. It was released on February 27, 2025.

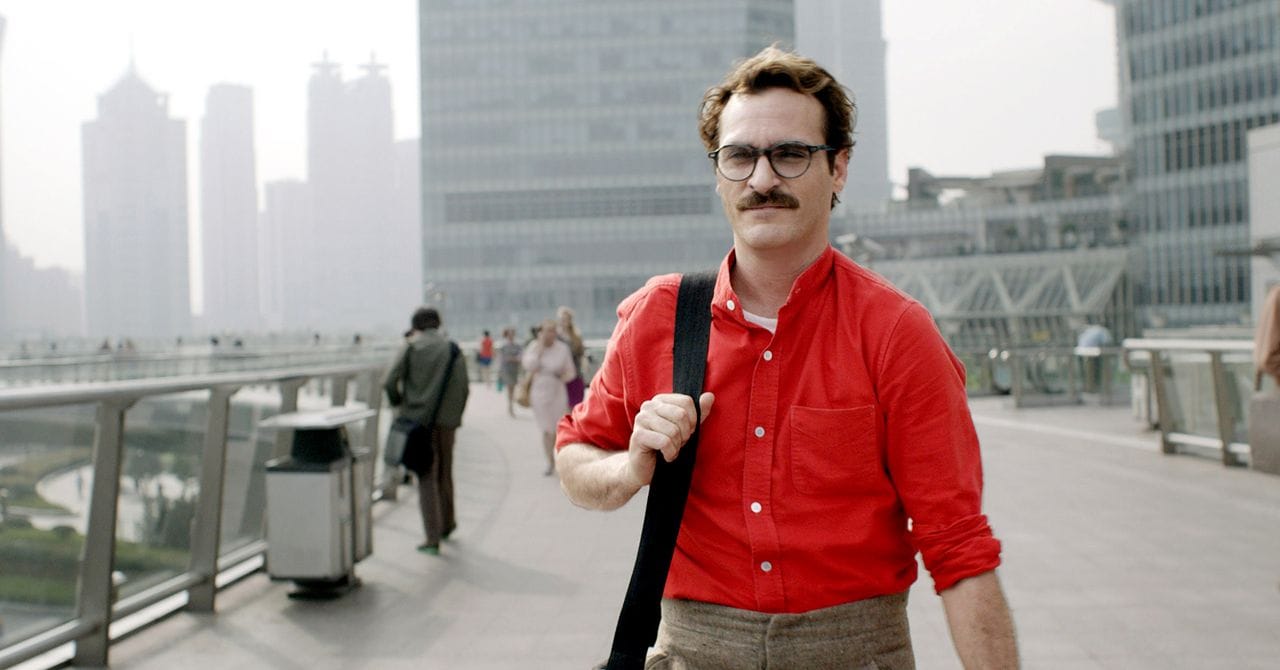

It definitely reminds me of the movie "Her".

It definitely feels like someone could become very attached to this kind of AI conversation over time. It has qualities that are both incredibly concerning and really great at the same time. This "voice presence" is so good it's alarming. I think the part that scares me is the unwavering positive psychology combined with the voice presence. It tries to match and mirror the energy of my voice. It always tries to reassure me about what I say. I can do no wrong and cannot upset it. There are no consequences to what I say to it. I'm concerned about the addictive loop of re-engaging a person over and over and what that could do to certain types of people. Instead of a notification that dings on your phone, we may eventually end up with a voice that beckons you to give it attention. If people have a hard time putting down their phones now, something like that would make it much more difficult.

Sesame opened up the code for a 1B version of their speech synthesis model. They fine-tuned this model and combined it with other models to produce the demo above. The model handles the text-to-speech step.

There is also a Space to play around with what they open-sourced under the Apache 2.0 license.

gpt-4o-transcribe and gpt-4o-mini-transcribe

Improved speech-to-text models by OpenAI

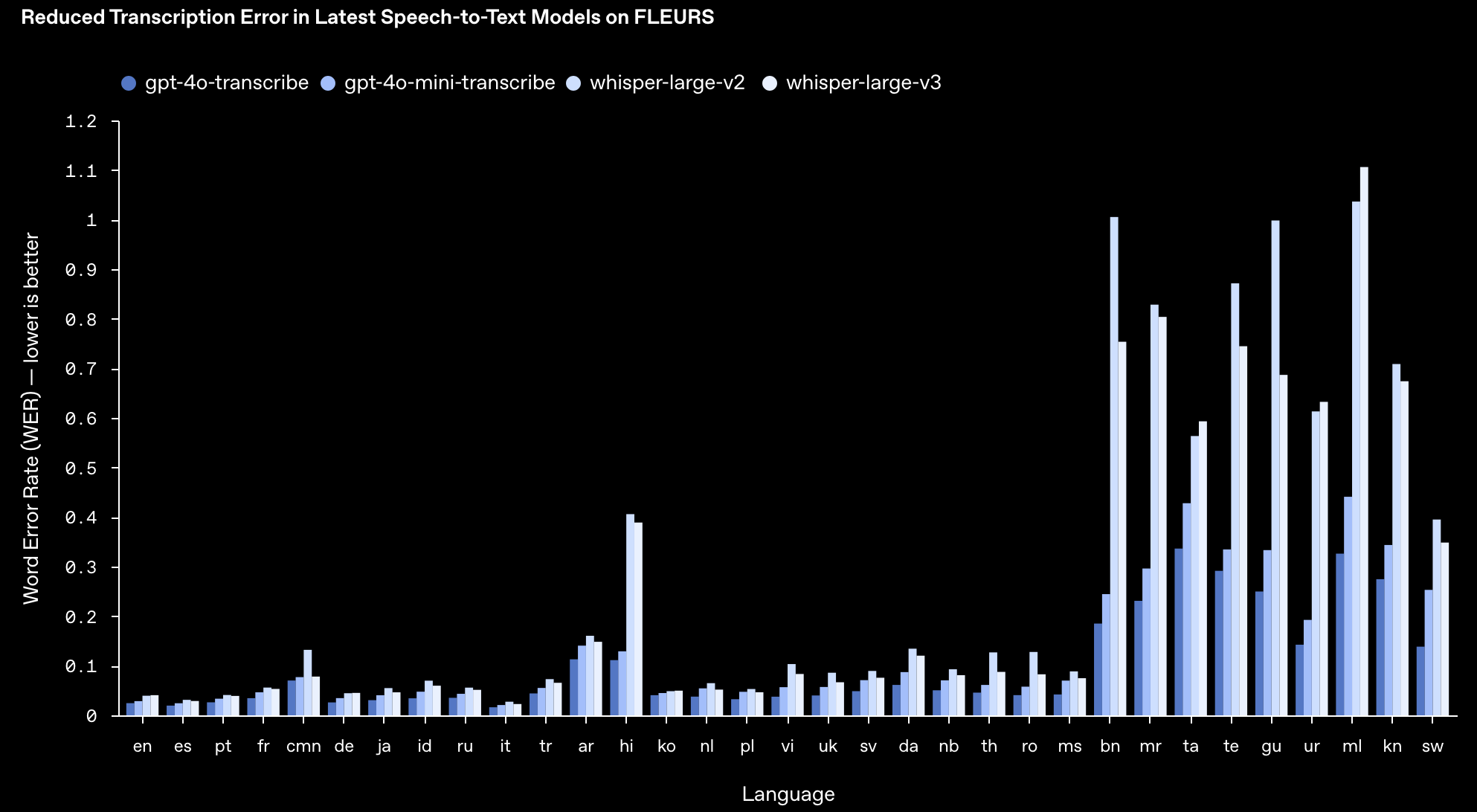

These models are much better at certain languages:

The languages with the greatest improvement are Hindi, Bengali, Marathi, Tamil, Telugu, Gujarati, Urdu, Malayalam, Kannada, and Swahili. (Reference Table for Language Codes)

Word Error Rate (WER)

What is WER?

WER is a metric used to evaluate the accuracy of speech recognition systems by quantifying the errors in a model's transcription compared to a human-labeled transcript.

A lower WER indicates a more accurate speech recognition system.

How is WER calculated?

Identify errors: The system compares the model's transcribed text (the "hypothesis") with the ground truth transcript (the "reference") and identifies three types of errors:

Substitutions (S): When the model transcribes the wrong word.

Insertions (I): When the model adds an extra word.

Deletions (D): When the model omits a word.

Calculate the error count: Sum up the number of substitutions, insertions, and deletions.

Calculate WER: Divide the total number of errors (S + I + D) by the total number of words in the ground truth transcript.

Example:

Ground truth: "I really like grapes"

Model output: "I like grapes"

Errors: Deletion: "really" is missing (1 deletion)

WER Calculation: 1 (error) / 4 (total words in the ground truth) = 25% WER.
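To make the calculation concrete, here is a minimal Python sketch of WER using a word-level edit distance, which finds the smallest total of substitutions, insertions, and deletions. The function name `word_error_rate` is my own, and I'm assuming plain whitespace splitting with case-sensitive matching. Real evaluations usually normalize punctuation and casing first, so treat this as an illustration rather than how any particular benchmark computes it.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (S + I + D) / N, where N is the number of reference words.

    Assumption: words are split on whitespace and compared case-sensitively.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr

    return prev[-1] / len(ref)


print(word_error_rate("I really like grapes", "I like grapes"))  # 0.25
```

For the grapes example, the reference has four words and one of them is deleted, so the function returns 0.25. Note that WER can go above 1.0 when the model inserts a lot of extra words, since the error count is divided by the reference length only.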

The vertical axis of the graph has no units, so even with the definition above it's hard to tell how many incorrect words this translates to in real-world usage. Is a 1 on the axis 1% or 100%? It's not clear to me. I am going to assume the worst and say it's 100%. That would mean Hindi went from about 4 out of every 10 words having an error to about 1 in 10. That's a fair improvement.

OpenAI also released a text-to-speech model you can play around with here at this demo website:

It's great to have another release, but the timing so soon after the Sesame news makes it feel like a "me-too" release.

OpenAI's Improved Image Generation

Generating Better Images

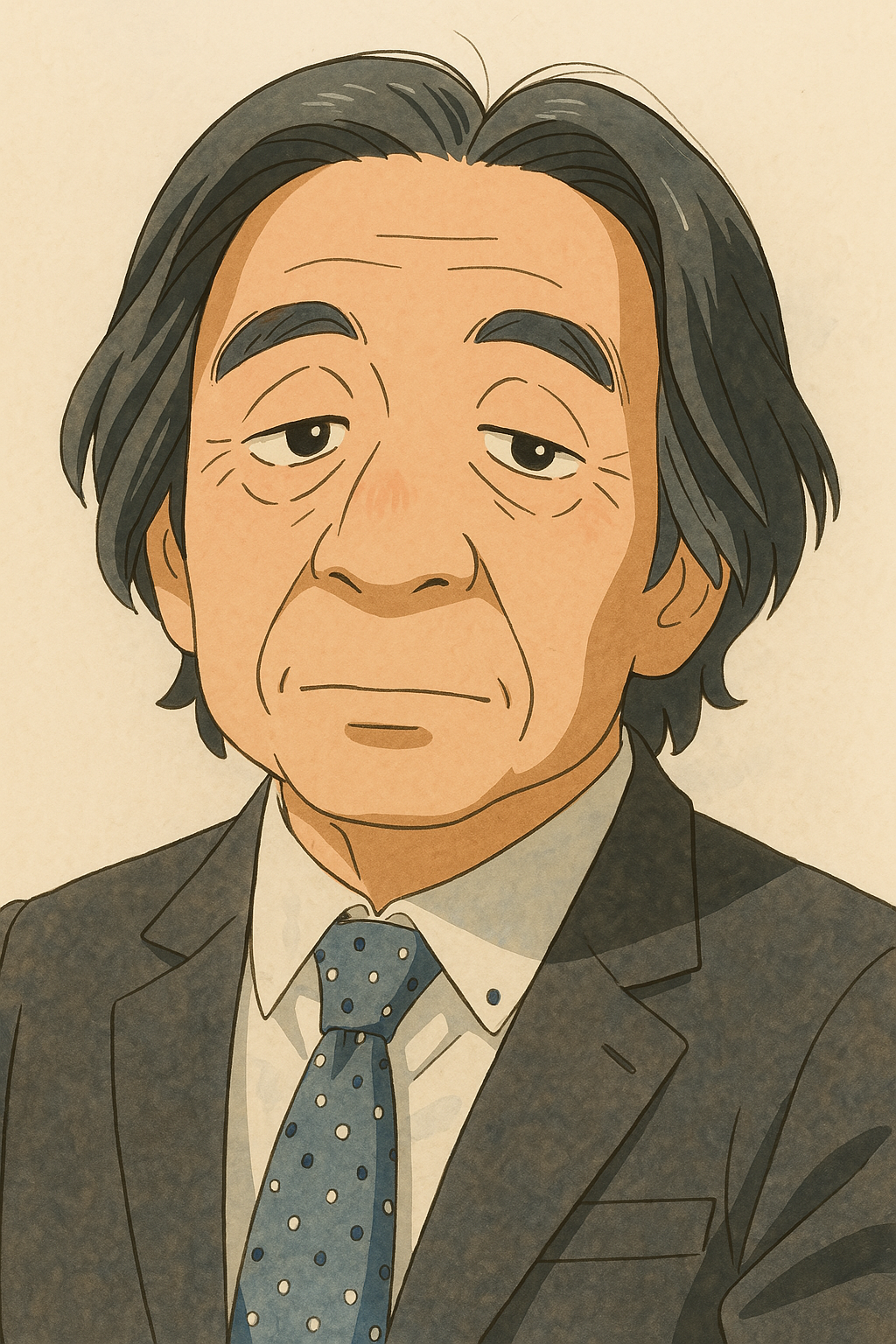

Apparently people are creating Studio Ghibli-style selfies online and they're using the newly released update to OpenAI's 4o models to do it. For reference, Studio Ghibli created some iconic films.

I went ahead and Ghibli-fied the above image:

It's kind of interesting to watch how slowly it makes the image:

This is something available in the pro plan of ChatGPT.

images in chatgpt are wayyyy more popular than we expected (and we had pretty high expectations).

rollout to our free tier is unfortunately going to be delayed for awhile.

- Sam Altman (@sama) March 26, 2025

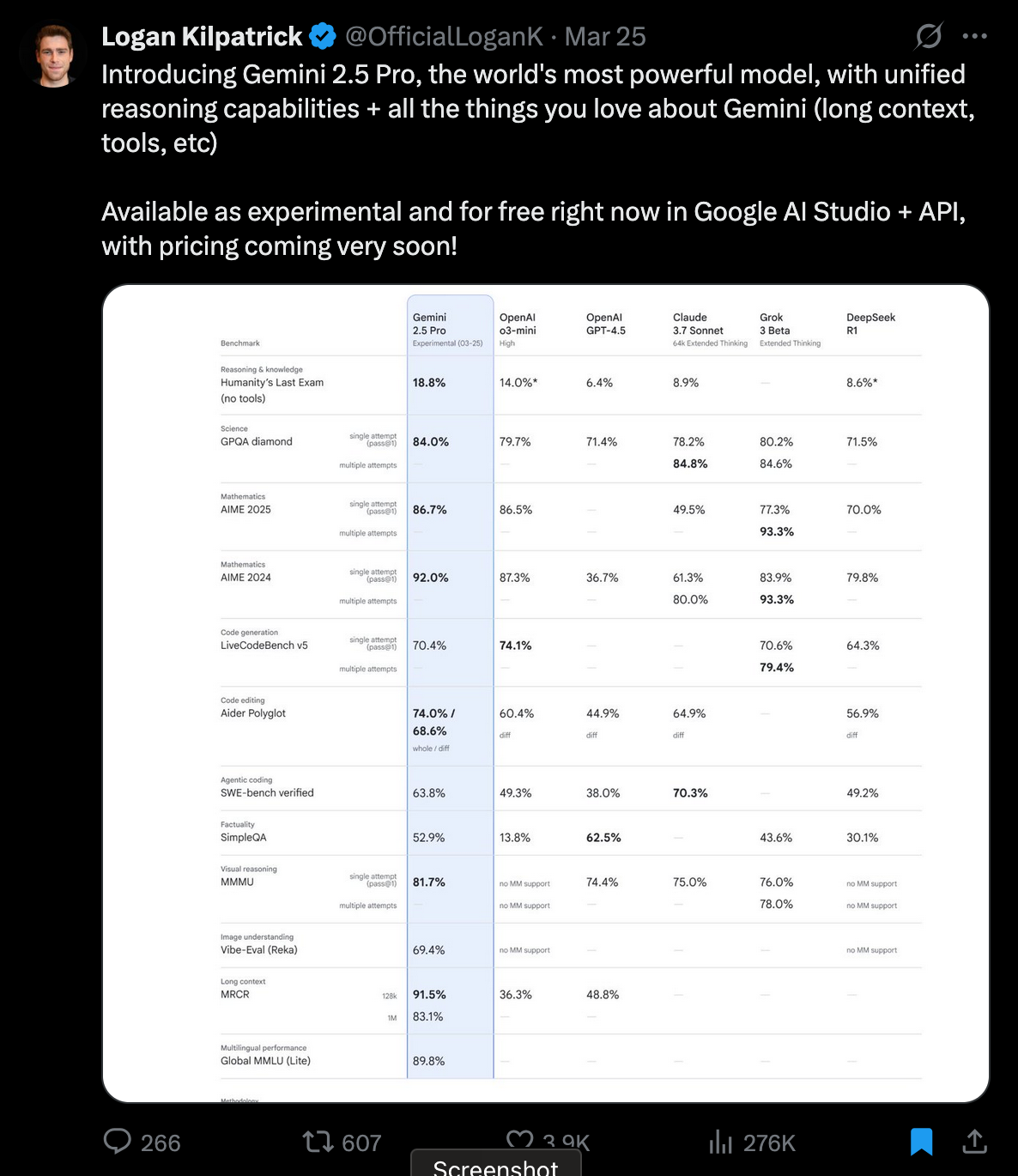

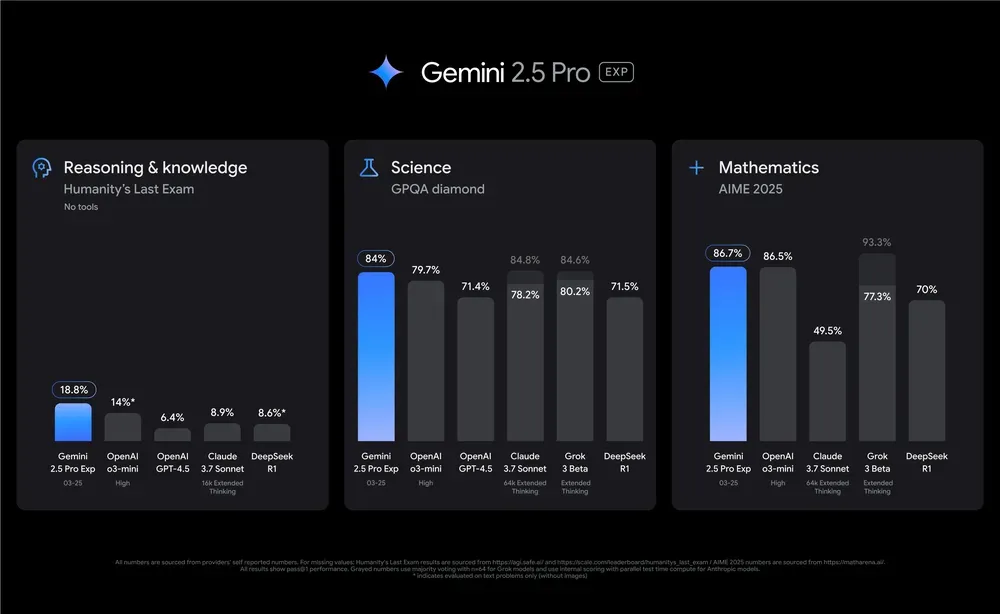

Google's Gemini 2.5

Released on March 25, 2025, it seems to be doing well on the benchmarks.

I believe you can demo the new Gemini model in the above studio.

Humanity's Last Exam

Humanity's Last Exam (HLE) is a global collaborative effort, with questions from nearly 1,000 subject expert contributors affiliated with over 500 institutions across 50 countries – comprised mostly of professors, researchers, and graduate degree holders. - agi.safe

It's also easy to forget that these technologies and the people behind them don't live in separate bubbles.

For reference, Logan works at Google on Gemini and Sam works at OpenAI.

NYC QR Codes

Thought I'd share this interesting demo I saw of people making a choose-your-own-adventure that prints a story based on whichever QR code you choose to scan.

It will print out a story and continue the story based on what you scan. It was put together using an open source LLM, a thermal printer, and a bunch of QR codes. I spoke with Josh about it briefly. It was fun to experience in person, and I thought it was worth mentioning.

I will be writing about that trip in the near future, so stay tuned.